OpenAI stresses that the bot does not replace a doctor (Picture: Getty)

Dr ChatGPT will see you now. The artificial intelligence (AI) chatbot is launching a new health mode to give medical advice.

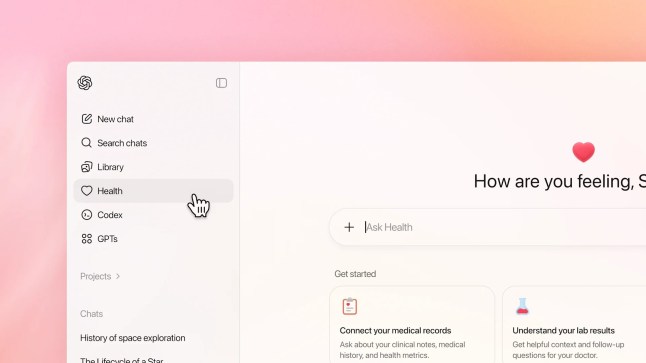

ChatGPT Health, which is not yet available in the UK, is a tab within the app where users can ask health-related questions.

The function was announced yesterday by OpenAI, the tech start-up behind the virtual assistant.

Health can analyse lab results, explain unclear messages from your doctor and provide your clinical history, OpenAI said.

Other usage examples include ‘post-surgery food guides’, picking a health insurance provider and outlining drug side effects.

But the company stressed it’s not putting the ‘GP’ in ChatGPT, saying: ‘Health is designed to support, not replace, medical care. It is not intended for diagnosis or treatment.’

The company is encouraging users to connect their medical records and wellness apps, such as Apple Health, to get ‘personalised’ responses.

Patient paperwork will be encrypted, OpenAI added, and conversations won’t be used to train the chatbot.

When you link an app with the bot, ChatGPT says, you’ll be in full control of how much access it has.

OpenAI said: ‘The first time you connect an app, we’ll help you understand what types of data may be collected by the third party. And you’re always in control: disconnect an app at any time and it immediately loses access.’

Many say they seek advice from chatbots due to frustrations with the health system, such as limited GP appointments or lengthy waiting lists.

‘AI gives immediate access to knowledge drawn from enormous datasets, in this case, about health,’ he said.

‘It’s convenient, non-judgmental and free, and the combination of accessibility and privacy is drawing patients in.’

But medical experts have long expressed unease about this, warning it’s the latest version of people Googling health symptoms.

Although the system can easily pass a medical licensing exam, researchers say it can spit out inaccurate or false information.

One man was taken to hospital with an 18th-century condition after allegedly following ChatGPT’s advice to replace salt in his diet with sodium bromide.

Chatbots also often try to please us by reinforcing what we say, meaning leading questions such as ‘Don’t you think I have the flu?’ could prompt them to agree with you, regardless of the facts, experts warn.

It will be a separate tab (Picture: ChatGPT)

People, especially young adults, are also using AI systems as a form of therapy, to the alarm of psychological experts Metro spoke with.

Sophie McGarry, a solicitor at the medical negligence law firm Patient Claim Line, warned that AI health advice can be ‘very dangerous’.

She told Metro that bots may overdiagnose, underdiagnose or misdiagnose people.

‘This, in turn, could lead to potentially unnecessary stress and worry and could lead people to urgently seek medical attention from their GP, urgent care centres or A&E departments, which are already stretched, adding more unnecessary pressure, or could lead to people attempting to treat their AI-diagnosed conditions themselves,’ she said.

‘As a clinical negligence solicitor, I see far too many cases of people’s lives being turned upside down because of misdiagnosis or delays in diagnosis where earlier, appropriate input would have led to a better, often life-changing, sometimes life-saving outcome,’ she said.

‘False reassurances from AI health advice could lead to the same devastating outcomes.’

OpenAI said it worked with more than 260 physicians over the last two years to gather feedback on health model outputs, including teaching the model to recommend follow-ups with a doctor when needed and not to oversimplify.

United News - unews.co.za